Evaluation Concepts

The Distinction Between Evaluation and Research

Here we draw on what Kirk Knestis, CEO of Hezel Associates, calls the “NSF Conundrum”: confusion among stakeholders about how a PI’s role in research differs from the role of program evaluation. He writes in the AEA365 blog (August 14, 2014): “The most constructive distinction here is between (a) studying the innovation of interest, and (b) studying the implementation and impact of the activities required for that inquiry. For this conversation, call the former ‘research’ (following NSF’s lead) and the latter ‘evaluation’- or more particularly, ‘program evaluation.’”

For further clarification of this distinction, and for a framework for your project, review the Common Guidelines for Education Research and Development developed by NSF and the U.S. Department of Education’s Institute of Education Sciences (IES). To read Knestis’s original post, visit AEA365 Tip-a-Day.

The following section is adapted from Assessing Campus Diversity Initiatives [see References, Garcia, et al.] and the User-Friendly Handbook for Project Evaluation [see References, NSF].

Not everyone enjoys the prospect of conducting a program evaluation, so it is worth considering why evaluation is useful and how it can contribute to your REU program.

“Evaluation is not separate from, or added to, a project, but rather is part of it from the beginning”

p. 3, NSF User-Friendly Handbook for Project Evaluation.

Reasons for Evaluation:

- Produces useful knowledge

- Documents and clarifies useful work

- Addresses concerns identified by the CISE REU community

- Contributes to shaping institutional policy

- Allows for immediate corrections based on findings

- Provides context

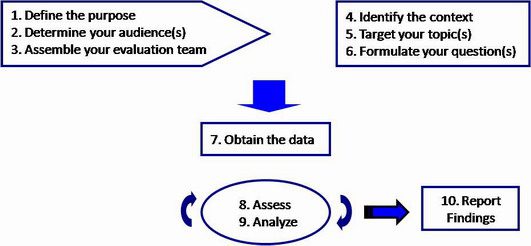

Steps of Evaluation

- Define the purpose

Consider why you are conducting an evaluation of your REU site.

- Is it to measure program improvement?

- Is it to monitor progress of the program and/or the individual students?

- Is it to demonstrate accountability for student success and/or to the external funding agency?

- Are you responding to an institutional mandate?

For most REU sites, all of these questions together define the evaluation purpose. Next, design a conceptual model to identify key evaluation points. Refer to Logic Models and Recommended Indicators.

- Determine your audience(s)

Clarify the primary audience(s) for the evaluation; in other words, determine who your stakeholders are. Common stakeholders include:

- External funding agency (NSF)

- Your department and institution

- The CISE REU community

- The larger academic community

How you report to each stakeholder group should depend on what information is crucial to that group. Not all stakeholders value the same information, nor do they require all of it. For example, aggregate demographic information about participants is relevant to all stakeholders, whereas individual participants’ names are confidential and should appear only in your institutional records and in reports submitted directly to NSF. See Dissemination.

- Assemble your evaluation team

As a general guideline, NSF suggests dedicating 5-10% of project cost to evaluation. However, because CISE REU funding does not build evaluation into the budget, most REU sites will find an external evaluator cost-prohibitive. Internal evaluation, conducted by one or more individuals within your institution, is likely the most feasible option for evaluating your REU site. Refer to Who Should Do Evaluation for some suggested approaches, along with their benefits and considerations.

- Identify the context

Determine your educational mission from the perspectives of the REU site, its stakeholders, and the academic climate (both national and institutional). What is remarkable and unique about your REU site will depend on these contextual factors. For example, a new REU site will be establishing start-up procedures and can gain a great deal from formative evaluation during its first year of implementation. An extension site, by contrast, can consider longitudinal follow-up with past REU participants. Your region, student population, and institutional resources will also shape both your evaluation and your program’s success. Refer to Evaluation Models.

- Target your topic(s)

Establish what information you want to gather about the REU site. You may want to focus on one or more of the following:

- Site administration and program satisfaction

- Individual student development

- Research and academic skills

- Professional development skills

- Self-efficacy

- Intent to go to graduate school

- Retention in CISE

- Research initiatives and outcomes

Refer to Recommended Indicators.